Anastasiia Vasylieva, Lviv Polytechnic National University, Lviv, Ukraine

Roman Melnyk, Lviv Polytechnic National University, Lviv, Ukraine

Abstract: Cloud type classification is an image classification problem that can be solved with supervised and unsupervised machine learning methods and artificial neural networks. In this research, a convolutional neural network (CNN) is proposed to classify cloud images captured by the Visible Infrared Imaging Radiometer Suite (VIIRS) on board the NOAA-20 satellite. The CNN model classifies four cloud types: Cirrus, Cumulonimbus, Cumulus, and Stratocumulus. Experimental results show that the proposed method classifies clouds more accurately than other machine learning methods, namely the Random Forest, K-Nearest Neighbours, and Decision Tree algorithms.

Keywords: satellite imagery, cloud classification, convolutional neural network.

Introduction. Today, cloud recognition and interpretation from satellite imagery play a significant role in meteorological monitoring and in climate and weather forecasting. The obtained data can be used to detect wildfires, dust storms, volcanic emissions, and icebergs. Another application of satellite images is regular monitoring of surface and sea temperature using different types of images. Classifying cloud types in satellite imagery can help to estimate the amount of precipitation and to track storms, turbulence, or instability. At present, manual cloud interpretation is widely used to classify cloud images. However, this method has disadvantages: the quality of human recognition varies, and processing large data sets is complex and time-consuming. Suitable alternatives are therefore supervised and unsupervised machine learning methods and artificial neural networks [1].

The aims of this research are to analyze existing deep learning methods for image recognition and processing, to create an artificial neural network for cloud classification based on satellite images, and to estimate its accuracy.

Analysis of the existing approaches. The most common unsupervised machine learning methods for cloud classification are the threshold method, cluster analysis, and the histogram method. The threshold method was successfully applied to extract cloud features and then to classify clouds into low-level, mid-level, and high-level clouds using MTSAT data [2]. To improve threshold determination, the histogram method was proposed: a histogram of the satellite image is built to obtain the distribution of cloud colors, from which the threshold for distinguishing cloud types is determined [3, 4]. Another method applied to cloud classification is K-means clustering, which assigns clouds to classes according to the minimum-distance principle. Ming Lee et al. [5] used this method to classify ground-based cloud images into high-level, medium-level, and low-level clouds. The result of this classification was later used as input for a system that dynamically determines the amount of precipitation, as well as the influence of low-level clouds on it. K-means clustering was also used to classify clouds in infrared-channel imagery from the Geostationary Operational Environmental Satellite GOES-13 [6]: cloud features were recognized first, after which the cloud height was estimated using linear regression. The classification result was satisfactory but depended on the quality of the satellite image.

In [7], the authors created a classification model based on the supervised K-nearest neighbor method and a genetic algorithm, which detects cloud classes such as dense dark cloud, dense white cloud, and cirrus cloud. The SWIMCAT (Singapore Whole sky IMaging CATegories) database was used to verify the results. The cloud image classification method presented by Chufu Y. et al. [8] uses the random forest method and FengYun-4A (FY-4A) satellite images. The study found that random forest performs much better than the K-nearest neighbor and back-propagation neural network methods, and that models using satellite images of all spectral channels also performed better. A support vector machine (SVM) was proposed for classifying satellite cloud images in [9]: the images were divided into smaller blocks, and brightness and gray-level features were extracted for each block. The results showed that the accuracy of cloud detection in satellite images exceeds 90%.

Algorithms based on artificial neural networks are loosely modeled on the human brain and perform functions such as understanding, learning, problem-solving, and decision-making. The types of artificial neural networks include convolutional neural networks, feed-forward neural networks, probabilistic neural networks, time-delay neural networks, radial basis function networks, and recurrent neural networks [10]. Convolutional neural networks are the type most commonly used for image classification.

In [11], the authors developed the Deep Neural Network Cloud-Type Classification (DeepCTC) model for classifying cloud types in satellite images and determining the precipitation amount. The results showed that the model distinguishes well such cloud types as high stratus, high cumulus, cumulus, low, and high clouds. However, the determination of low-level clouds remained a problem for the developed model. For the recognition and classification of ground-based cloud images, Zhang J. et al. [12] developed the convolutional neural network CloudNet, which can classify 11 types of clouds. This network is an improved version of AlexNet and consists of 5 convolutional layers and 2 fully connected layers. CloudNet takes a set of fixed-size RGB images and outputs a sequence of prediction labels representing the probability of each category. A drawback of networks that classify pixel by pixel is that each pixel is analyzed separately, without considering the whole cloud and its shape. Convolutional neural networks, in contrast, determine the cloud type from the entire image and ignore the pixel-by-pixel characteristics of clouds. Another disadvantage of neural networks is the need to assemble a large, representative training data set, which requires expert knowledge and is time-consuming. In our work, we decided to develop a convolutional neural network to classify cloud types in satellite images, because artificial neural networks with a carefully selected architecture remain the most effective approach.

Practical implementation. The EarthData service [13] was chosen for downloading and processing satellite images of clouds. It provides images captured by the Visible Infrared Imaging Radiometer Suite (VIIRS) on board the NOAA-20 satellite, which collects data in the visible and infrared spectral ranges. To solve the problem of classifying cloud types in satellite images, we prepared a set of RGB cloud images of different sizes belonging to the following four classes:

- Cirrus – high-level clouds with a fibrous texture, a light gray to white tone in visible images, and a white tone in infrared images. This type of cloud often occurs in the vertical motions of large-scale weather patterns.

- Cumulonimbus – clouds with vertical development that produce large amounts of precipitation and usually have a bright white color, well-defined contours, and a plain texture.

- Cumulus – low-level clouds that can be described as puffy and cotton-shaped, with a light gray or gray color in infrared images and a light gray or white color in visible images.

- Stratocumulus – low-level clouds characterized by a rounded shape, a diameter of 10 to 100 km, a tone ranging from white in the center to light gray on the periphery in visible images, and a light gray to dark gray color in infrared images.

This paper proposes a convolutional neural network model that accepts images of the given classes as input with a size of 160 x 160 x 3, where 160 x 160 are the dimensions of the image and 3 is the number of channels (red, green, blue). The prepared training images were preprocessed in two steps: all images were resized to 160 x 160, and the values of all pixels were divided by 255 to obtain values within the range [0; 1].
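The two preprocessing steps can be sketched in plain Python. This is only an illustration under stated assumptions: the paper does not say which interpolation was used for resizing, so nearest-neighbour sampling is assumed here, and the function names and the tiny synthetic image are hypothetical.

```python
def resize_nearest(image, out_h=160, out_w=160):
    """Resize an H x W x C image (nested lists) by nearest-neighbour sampling.

    Assumption: the paper does not name its interpolation method.
    """
    in_h, in_w = len(image), len(image[0])
    return [
        [image[(r * in_h) // out_h][(c * in_w) // out_w] for c in range(out_w)]
        for r in range(out_h)
    ]


def scale_pixels(image):
    """Map 8-bit channel values 0..255 to floats in [0, 1]."""
    return [[[v / 255 for v in pixel] for pixel in row] for row in image]


def preprocess(image, size=160):
    """Resize to size x size, then scale every channel value by 1/255."""
    return scale_pixels(resize_nearest(image, size, size))


# A tiny synthetic 2 x 3 RGB image stands in for a real cloud image.
tiny = [
    [[0, 0, 0], [128, 128, 128], [255, 255, 255]],
    [[255, 0, 0], [0, 255, 0], [0, 0, 255]],
]
tensor = preprocess(tiny)
shape = (len(tensor), len(tensor[0]), len(tensor[0][0]))
print(shape)
```

Whatever the original size of an image, the result has the 160 x 160 x 3 shape that the network expects.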

The first layer in the model is a convolutional layer, the core layer of a CNN. It accepts an input image of the given dimensions, also called a tensor, and applies 64 filters of size 2 x 2. Each filter slides over the input tensor, performs the convolution operation, and produces a feature map. Other hyperparameters of the convolutional layer are the stride, which is the number of pixels by which the filter moves after each operation and is equal to 1 by default, and the padding, which supplements the input image with a certain number of zero values on each edge. In our case, we do not use padding, so p = 0 at this step. We used the Rectified Linear Unit (ReLU) as the activation function after the convolutional layer; it passes positive values unchanged and replaces negative values with zero.

After the convolutional layer, there is a max pooling layer with a filter size of 2 x 2. Its purpose is to reduce the dimensionality of the data while preserving the most important characteristics by mapping several neighbouring neurons of the previous layer to a single element of this layer. Average pooling, which is also used in image classification, can miss some image features, so we decided to use max pooling, which not only reduces the dimensionality of the image but also suppresses noise and keeps the key features of the image. After that, the model has further combinations of convolutional and max pooling layers with 64 and 128 filters.

The last layers of the model are fully connected layers, which receive the flattened output of the last pooling layer. At this step, we used the SoftMax activation function, which is commonly used in the last layer of multiclass classifiers because it takes all values from the previous layer into account and converts them into a probability for each class.
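To make the layer description concrete, the sketch below traces how the spatial size of the tensor shrinks through the network, using the standard output-size formula floor((n + 2p - f) / s) + 1. Several details are assumptions, since the text does not state them: three convolution and pooling blocks with 64, 64, and 128 filters, a pooling stride equal to the pooling size, and the helper names themselves. ReLU and 2 x 2 max pooling are included as they are commonly defined.

```python
def conv_output_size(n, f, stride=1, padding=0):
    """Output width/height of a convolution or pooling window."""
    return (n + 2 * padding - f) // stride + 1


def trace_shapes(size, blocks, conv_f=2, pool_f=2):
    """Return the (layer, height, width, channels) after every layer.

    Assumption: each block is conv (stride 1, p = 0) followed by max pooling
    whose stride equals its window size.
    """
    shapes = []
    for filters in blocks:
        size = conv_output_size(size, conv_f, stride=1, padding=0)
        shapes.append(("conv", size, size, filters))
        size = conv_output_size(size, pool_f, stride=pool_f)
        shapes.append(("pool", size, size, filters))
    return shapes


def relu(x):
    """Pass positive values through, replace negative values with zero."""
    return max(0.0, x)


def max_pool_2x2(fmap):
    """Keep the largest value of every non-overlapping 2 x 2 window."""
    return [
        [
            max(fmap[r][c], fmap[r][c + 1], fmap[r + 1][c], fmap[r + 1][c + 1])
            for c in range(0, len(fmap[0]) - 1, 2)
        ]
        for r in range(0, len(fmap) - 1, 2)
    ]


# Assumed block layout: 64, 64 and 128 filters.
shapes = trace_shapes(160, blocks=(64, 64, 128))
flattened = shapes[-1][1] * shapes[-1][2] * shapes[-1][3]

feature_map = [[1, -2, 3, 0], [4, 5, -6, 7], [0, 1, 2, 3], [-1, -1, 8, -9]]
activated = [[relu(v) for v in row] for row in feature_map]
pooled = max_pool_2x2(activated)

for layer in shapes:
    print(layer)
print("flattened length:", flattened)
```

Under these assumptions the 160 x 160 input becomes 159 x 159 after the first convolution and 79 x 79 after the first pooling layer, and the vector passed to the fully connected layers has 19 x 19 x 128 elements.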

During model training, the cross-entropy function was used as the loss function to measure the difference between the true labels of the training data and the predicted results. For optimization, adaptive moment estimation (Adam) was chosen, which combines ideas from the RMSprop algorithm and stochastic gradient descent with momentum [14].
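The following sketch shows, for a single image, how SoftMax turns the outputs of the last layer into class probabilities, how cross-entropy scores them against the true class, and what one Adam update of a single weight looks like. The logits, the gradient value, and the Adam hyperparameters (the commonly used defaults) are illustrative assumptions and are not taken from the experiments.

```python
import math

CLASSES = ("cirrus", "cumulonimbus", "cumulus", "stratocumulus")


def softmax(logits):
    """Convert raw scores into probabilities that sum to 1."""
    peak = max(logits)  # subtracting the maximum avoids overflow in exp()
    exps = [math.exp(z - peak) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy(probs, true_index):
    """Loss for one sample: negative log-probability of the true class."""
    return -math.log(probs[true_index])


def adam_step(theta, grad, m, v, t, lr=0.001, beta1=0.9, beta2=0.999, eps=1e-8):
    """One Adam update of a single parameter (hyperparameters are assumed)."""
    m = beta1 * m + (1 - beta1) * grad       # momentum-like first moment
    v = beta2 * v + (1 - beta2) * grad ** 2  # RMSprop-like second moment
    m_hat = m / (1 - beta1 ** t)             # bias correction
    v_hat = v / (1 - beta2 ** t)
    theta = theta - lr * m_hat / (math.sqrt(v_hat) + eps)
    return theta, m, v


logits = [2.0, 0.5, 0.1, -1.0]  # hypothetical outputs of the last layer
probs = softmax(logits)
predicted = CLASSES[probs.index(max(probs))]

loss_if_cirrus = cross_entropy(probs, CLASSES.index("cirrus"))
loss_if_stratocumulus = cross_entropy(probs, CLASSES.index("stratocumulus"))

theta, m, v = adam_step(theta=1.0, grad=0.5, m=0.0, v=0.0, t=1)
print(predicted, loss_if_cirrus, loss_if_stratocumulus, theta)
```

The loss is small when the true class receives a high probability and large otherwise, which is what drives the weights toward correct predictions during training.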

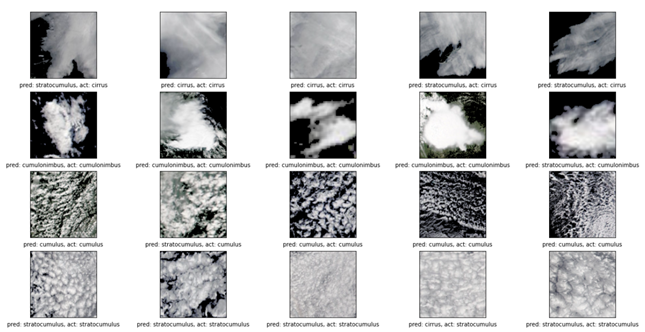

The model was trained on preprocessed images of the four classes (cirrus, cumulonimbus, cumulus, and stratocumulus), which were divided into training and testing data sets. The proposed network was trained for 20 epochs and reached an accuracy of 95% on the training data and 85% on the validation data. The accuracy and loss values were calculated at each stage of training. The cloud image classification results, namely the actual cloud class and the predicted one, are shown in Figure 1.

To evaluate the model, we calculated the following metrics on the test data set: Accuracy, Recall, and F1-Score. The values of these metrics for each image class are shown in Table 1.

Table 1

The proposed model performance metrics

| Image class | Accuracy | Recall | F1-Score |
|---|---|---|---|
| cirrus | 0.4 | 0.4 | 0.571429 |
| cumulonimbus | 0.8 | 0.8 | 0.888889 |
| cumulus | 0.8 | 0.8 | 0.888889 |
| stratocumulus | 0.8 | 0.8 | 0.888889 |
| All classes | 0.7 | 0.7 | 0.712302 |

From these results, it can be concluded that the cumulonimbus, cumulus, and stratocumulus image classes are recognized well enough: their accuracy is 80%. The model performs worst on the cirrus class (accuracy of 40%), which lowers the overall result: the accuracy for all classes is 70%.
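As a worked check of the per-class values in Table 1, the F1-score is the harmonic mean of precision and recall, F1 = 2PR / (P + R). The per-class F1 values in the table coincide with this formula when precision is taken as 1.0, which would be the case if each class were evaluated only on its own test images. This is an inference from the reported numbers and not something the text states, so the sketch below should be read with that assumption in mind.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Recall values copied from Table 1.
recall_by_class = {
    "cirrus": 0.4,
    "cumulonimbus": 0.8,
    "cumulus": 0.8,
    "stratocumulus": 0.8,
}

# Assumption: per-class precision of 1.0 (inferred, not stated in the text).
f1_by_class = {
    name: round(f1_score(1.0, recall), 6)
    for name, recall in recall_by_class.items()
}
mean_recall = sum(recall_by_class.values()) / len(recall_by_class)

print(f1_by_class)
print(mean_recall)
```

The mean of the four per-class recall values is 0.7, which matches the accuracy reported for all classes.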

To verify the effectiveness of the proposed convolutional neural network, we decided to apply common supervised classification methods to determine the types of clouds and to evaluate their accuracy. The following classification methods were used, which are suitable for classifying both ordinary data and images: the Random Forest algorithm, K-Nearest Neighbors, and the Decision Tree algorithm.
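Classical classifiers operate on fixed-length feature vectors, so an image has to be turned into such a vector first. The sketch below illustrates the idea with a minimal K-Nearest Neighbors classifier on flattened pixel values. The feature representation, the value of k, and the toy 1 x 2 pixel "images" are assumptions made for illustration, as the text does not describe how the baselines were configured.

```python
from collections import Counter


def flatten(image):
    """Turn an H x W x C image (nested lists) into one flat feature vector."""
    return [v for row in image for pixel in row for v in pixel]


def knn_predict(train_vectors, train_labels, query, k=3):
    """Label a query vector by majority vote among its k nearest neighbours."""
    distances = sorted(
        (sum((a - b) ** 2 for a, b in zip(vec, query)), label)
        for vec, label in zip(train_vectors, train_labels)
    )
    votes = Counter(label for _, label in distances[:k])
    return votes.most_common(1)[0][0]


# Toy training set: bright images vs. gray images (hypothetical values).
train_images = [
    [[[0.95] * 3, [1.00] * 3]],
    [[[0.90] * 3, [0.92] * 3]],
    [[[0.50] * 3, [0.55] * 3]],
    [[[0.60] * 3, [0.58] * 3]],
]
train_labels = ["cumulonimbus", "cumulonimbus", "cumulus", "cumulus"]
train_vectors = [flatten(img) for img in train_images]

query = flatten([[[0.93] * 3, [0.97] * 3]])
prediction = knn_predict(train_vectors, train_labels, query, k=3)
print(prediction)
```

Flattening discards the spatial arrangement of pixels, which is one plausible reason why such methods are at a disadvantage compared with a convolutional network on this task.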

After comparing the results of supervised classification algorithms and the proposed convolutional neural network model, we have concluded that the proposed model has better estimation accuracy for all classes, which was to be expected, because the CNN considers more parameters for predicting the image class. The results of the comparison of the used algorithms are shown in Table 2.

Table 2

Metrics comparison of the used algorithms

| Algorithm | Accuracy | Precision | Recall | F1-Score | Execution time, s |
|---|---|---|---|---|---|
| Random Forest | 0.585 | 0.72 | 0.84 | 0.72 | 17.7 |
| K-Nearest Neighbours | 0.35 | 0.87 | 0.35 | 0.45 | 5.2 |
| Decision Tree | 0.54 | 0.63 | 0.54 | 0.53 | 59.2 |
| Proposed CNN | 0.7 | 0.79 | 0.7 | 0.712302 | 836.4 |

Conclusions. Recognizing and classifying cloud cover in satellite images is a relevant research topic because such information is widely used in the detection of atmospheric events, meteorological monitoring, and the prediction of weather and precipitation changes. The neural network approach to detecting and classifying cloud images is gaining popularity and makes it possible to process complex images and large amounts of data. The convolutional neural network is one of the most effective and universal methods for image classification and can also be used to classify cloud types in satellite images.

During the research, we prepared an input data set of images captured by the Visible Infrared Imaging Radiometer Suite (VIIRS) on board the NOAA-20 satellite, divided into 4 classes: cumulus, cumulonimbus, cirrus, and stratocumulus. The proposed convolutional neural network showed good results: the cumulus, cumulonimbus, and stratocumulus cloud types were identified with 80% accuracy, but cirrus remains hard for the model to recognize, with an accuracy of only 40%. Compared with other supervised methods, namely the Random Forest, K-Nearest Neighbors, and Decision Tree algorithms, the proposed convolutional neural network classifies cloud types with higher accuracy.

References:

1. Ball, John & Anderson, Derek & Chan, Chee Seng. (2017). A Comprehensive Survey of Deep Learning in Remote Sensing: Theories, Tools and Challenges for the Community. Journal of Applied Remote Sensing. doi: 10.1117/1.JRS.11.042609.

2. Dwi Prabowo Yuga Suseno & Tomohito J. Yamada (2012). Two-dimensional, threshold-based cloud type classification using MTSAT data, Remote Sensing Letters, 3:8, 737-746. doi: 10.1080/2150704X.2012.698320

3. Gacal, Glenn Franco & Lagrosas, Nofel. (2016). Cloud classification based on histogram analysis of pixel values of night time cloud images over Manila Observatory (14.64N, 121.07E).

4. Li M, Wang L, Deng S, Zhou C (2020) Color image segmentation using adaptive hierarchical-histogram thresholding. PLOS ONE 15(1): e0226345. URL: https://doi.org/10.1371/journal.pone.0226345

5. Li M, Wang L, Deng S, Zhou C (2020) Color image segmentation using adaptive hierarchical-histogram thresholding. PLOS ONE 15(1): e0226345. URL: https://doi.org/10.1371/journal.pone.0226345

6. G. Rudrappa and N. Vijapur, “Cloud Classification using K-Means Clustering and Content based Image Retrieval Technique,” 2020 International Conference on Communication and Signal Processing (ICCSP), 2020, pp. 0700-0704, doi: 10.1109/ICCSP48568.2020.9182211.

7. Rajini, S. & Tamilpavai, G. (2018). Classification of Cloud/sky Images based on kNN and Modified Genetic Algorithm. 1-8. doi: 10.1109/I2C2SW45816.2018.8997159.

8. Y. Zhai and X. Zheng, “Random Forest based Traffic Classification Method In SDN,” 2018 International Conference on Cloud Computing, Big Data and Blockchain (ICCBB), 2018, pp. 1-5, doi: 10.1109/ICCBB.2018.8756496.

9. Pengfei Li, Limin Dong, Huachao Xiao, Mingliang Xu, A cloud image detection method based on SVM vector machine, Neurocomputing, Volume 169, 2015, Pages 34-42, ISSN 0925-2312. URL: https://doi.org/10.1016/j.neucom.2014.09.102.

10. P. Vadapalli. 7 Types of Neural Networks in Artificial Intelligence Explained. 2020. URL: https://www.upgrad.com/blog/types-of-neural-networks/

11. Afzali Gorooh, V.; Kalia, S.; Nguyen, P.; Hsu, K.-l.; Sorooshian, S.; Ganguly, S.; Nemani, R.R. Deep Neural Network Cloud-Type Classification (DeepCTC) Model and Its Application in Evaluating PERSIANN-CCS. Remote Sens. 2020, 12, 316. URL: https://doi.org/10.3390/rs12020316

12. Zhang, J. L., Liu, P., Zhang, F., & Song, Q. Q. (2018). CloudNet: Ground-based cloud classification with deep convolutional neural network. Geophysical Research Letters, 45, 8665–8672. URL: https://doi.org/10.1029/2018GL077787

13. Earth Data Worldview. URL: https://worldview.earthdata.nasa.gov/

14. Yamashita, R., Nishio, M., Do, R.K.G. et al. Convolutional neural networks: an overview and application in radiology. Insights Imaging 9, 611–629 (2018). URL: https://doi.org/10.1007/s13244-018-0639-9