Yurii Rubel

student, department of software,

Institute of Computer Sciences and Information Technologies,

Lviv Polytechnic National University, Lviv, Ukraine

yurii.rubel.mnpzm.2022@lpnu.ua

Andriy Fechan

doctor of technical sciences, professor, department of software,

Institute of Computer Sciences and Information Technologies,

Lviv Polytechnic National University, Lviv, Ukraine

andrii.v.fechan@lpnu.ua

Nataliya Fechan

teacher, Separate structural subdivision Lviv Professional College of Transport Infrastructure,

Lviv Polytechnic National University, Lviv, Ukraine

natafechan@gmail.com

Abstract

With the growing popularity of distance and blended learning, adaptive testing is increasingly used in the educational process. The article discusses an individualized approach to testing intended to address the obsolescence of traditional testing methods. The research also examines the role of adaptive testing in modern education.

An adaptive testing system offers an innovative approach to knowledge assessment: it collects and analyzes user response data, which allows it to select the optimal test difficulty for each participant.

This article presents the architecture of an adaptive testing software system built from software agents, which makes the system easy to extend; the system implements the process of computerized adaptive testing (CAT). The CAT process is the core of adaptive testing, and each of its components is implemented as a software agent.

A prototype of an adaptive testing software system for the mobile application “Вир Історії” (History Vortex) is presented. The prototype implements adaptive testing based on the CAT process using the one-parameter Rasch model.

For future improvements of adaptive testing, a hybrid implementation approach is considered that combines the one-parameter Rasch model with a feed-forward neural network (FNN).

Keywords: Adaptive testing, Computerized adaptive testing, Item response theory, Neural network, Mobile application, Knowledge testing, Education.

Introduction

In education, there is a growing need for adaptive testing systems. Many adaptive testing solutions are available today, but most of them are paid and do not offer adaptive tests on the history of Ukraine. This is because most available solutions are developed abroad and do not take the needs of the Ukrainian market into account.

Adaptive testing [1] is a form of testing in which the questions asked during the test are selected automatically based on the user’s answers to previous questions. The main idea of adaptive testing [2] is to use testing time as effectively as possible and to minimize the user’s effort while increasing the accuracy of the assessment of the user’s knowledge level.

The term computerized adaptive testing (CAT) [3] is also used: it means that adaptive testing relies on computer technology and dedicated software to measure each test participant’s knowledge level individually and in real time. The software system is planned to be implemented on the basis of the CAT process using the one-parameter Rasch model.

A feed-forward neural network (FNN) will be used in further development, since it can take the participant’s answers as input and produce a knowledge score that is then used to select the next question. Using a neural network will make it possible to build a hybrid implementation that combines the one-parameter Rasch model with an FNN.

The purpose of this article is therefore to study adaptive testing and its role in education and to develop a prototype of an adaptive testing system for the history of Ukraine. The prototype implements adaptive testing based on the computerized adaptive testing process using the one-parameter Rasch model.

Overview of the subject area of adaptive testing and its role in education

Adaptive testing adapts the assessment to the test participant’s abilities. In most cases, this means selecting each question based on the participant’s scores on previous questions so as to measure their level as accurately as possible. If participants do well, the questions become more difficult; if they do poorly, the questions become easier. At some point the test termination criterion is met and the scoring procedure is performed.

Adaptive testing can be carried out in different ways [4]. The most common and simplest option is to assign a difficulty level to each test question. Another common option is to associate questions with particular topics or categories. In this case, if the user makes a mistake, the next question is on a topic they know less well, and if the participant answers correctly, they are offered a question on a different topic.
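The topic-based option can be illustrated with a small sketch. It assumes that "the topic the user knows less well" means the topic with the lowest share of correct answers so far, and that after a correct answer the least-practised other topic is chosen; the function name `next_topic` and the topic names are hypothetical and do not come from the prototype.

```python
from collections import defaultdict


def next_topic(history, topics, last_topic, last_correct):
    """Choose the topic of the next question (hypothetical helper).

    history: list of (topic, correct) pairs for the questions answered so far.
    topics: all topics available in the question bank.
    """
    asked = defaultdict(int)
    right = defaultdict(int)
    for topic, correct in history:
        asked[topic] += 1
        right[topic] += int(correct)

    def accuracy(topic):
        # Share of correct answers; a topic with no answers yet counts as 0.5 (no evidence).
        return right[topic] / asked[topic] if asked[topic] else 0.5

    if not last_correct:
        # After a mistake: the topic the user knows least well (ties keep list order).
        return min(topics, key=accuracy)
    # After a correct answer: switch topic, preferring the least-practised one.
    others = [t for t in topics if t != last_topic]
    return min(others, key=lambda t: asked[t]) if others else last_topic


history = [("Kyivan Rus", True), ("Cossack era", False)]
topics = ["Kyivan Rus", "Cossack era", "20th century"]
print(next_topic(history, topics, "Cossack era", last_correct=False))  # Cossack era
print(next_topic(history, topics, "Cossack era", last_correct=True))   # 20th century
```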

When it comes to adaptive testing, item response theory (IRT) [5], an approach to analyzing test data, is worth mentioning. In IRT-based adaptive testing, tasks of an appropriate difficulty are selected for each user by taking into account two kinds of parameters: the user’s knowledge level and the difficulty of the tasks. IRT itself makes it possible to estimate these parameters, such as question difficulty and the user’s knowledge level, from the answers to individual questions. In adaptive testing based on IRT [6], each question has its own difficulty, expressed on the same scale as the user’s knowledge level parameter; together, the two determine the probability that the user answers the question correctly. Therefore, the main task of IRT-based adaptive testing is to obtain an objective estimate of the latent parameter of the user’s knowledge level.

Next, consider how IRT models can be used to implement CAT. An IRT model [7] is a mathematical model used to assess a respondent’s (user’s) ability based on their responses to tasks. In the context of CAT, IRT models [8] are used to select the next task automatically depending on the user’s previous answers. This reduces the number of tasks required to reach sufficient measurement accuracy and shortens the overall test time.
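A widely used IRT selection rule in CAT is maximum information: the next item is the unused one whose Fisher information is highest at the current ability estimate. For the Rasch model this information is P(1 − P), which peaks when the item difficulty equals the ability estimate. The sketch below illustrates this general rule; it is not presented as the selection rule of the prototype, and the difficulty values are hypothetical.

```python
import math


def rasch_p(theta, b):
    """Probability of a correct answer under the Rasch (1PL) model."""
    return 1.0 / (1.0 + math.exp(-(theta - b)))


def item_information(theta, b):
    """Fisher information of a Rasch item at ability theta: P * (1 - P)."""
    p = rasch_p(theta, b)
    return p * (1.0 - p)


def select_max_information(theta, difficulties, used):
    """Index of the unused item that is most informative at theta, or None."""
    candidates = [i for i in range(len(difficulties)) if i not in used]
    if not candidates:
        return None
    return max(candidates, key=lambda i: item_information(theta, difficulties[i]))


difficulties = [-2.0, -0.5, 0.4, 1.5]  # hypothetical calibrated b values
print(select_max_information(0.3, difficulties, used=set()))  # 2
print(select_max_information(0.3, difficulties, used={2}))    # 1
```

Because each selected item is close to the current estimate, every answer carries as much information as possible, which is why fewer items are needed for a given precision.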

Research on adaptive testing in education focuses on improving the methods of assessing pupils’ and students’ knowledge using innovative technologies. Adaptive testing plays an important role in education; its key aspects are as follows:

- Individualization of testing: Adaptive tests give each participant a test whose questions depend on their answers to previous questions. This contributes to a more accurate assessment of knowledge and skills.

- Efficiency: Adaptive testing determines a student’s knowledge level effectively while reducing the time and length of testing, since the system automatically adapts to the participant’s answers.

- Stimulating self-study: Adaptive testing can encourage pupils and students to study independently, as it helps them identify their own weaknesses and work on them.

- Personalized learning: The results of adaptive testing can be the basis for developing individualized learning plans and adjusting the pedagogical process for each pupil or student.

- Modern technologies and data analysis: Research in this area also focuses on the use of neural networks and data analytics to improve the efficiency and accuracy of testing. Adaptive testing aims to make the testing process more individualized and efficient.

Research results

Based on this analysis, a prototype of the adaptive testing software system was developed on the basis of the CAT process using the one-parameter Rasch model. In the prototype, the CAT process is organized as an architectural solution that arranges the technical components needed to build it (Fig. 1), following an iterative algorithm [9-10]. CAT requires five components:

- A calibrated item pool is a set of test questions with different difficulty levels and parameters.

- The starting point is the initial level of user knowledge, which is determined at the beginning of the test.

- An item selection algorithm is a method of selecting the next question for a user based on their previous answers.

- The scoring procedure is a method of calculating the user’s points based on his answers and question parameters.

- The test termination criterion is the condition under which testing is terminated.
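The way the five components interact can be sketched as a generic loop in which each component is a pluggable function. This is a minimal illustration under stated assumptions, not the prototype's code: the names `Item` and `run_cat`, the toy step-based ability update, and the simulated participant are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Item:
    qid: str
    b: float  # calibrated difficulty from the item pool


def run_cat(pool, start_theta, select, answer, update, should_stop):
    """Generic CAT loop; each argument plugs in one of the five components."""
    theta = start_theta                   # starting point
    responses = []                        # (item, correct) pairs in order
    used = set()
    while not should_stop(responses):     # test termination criterion
        item = select(pool, theta, used)  # item selection algorithm
        if item is None:                  # calibrated item pool exhausted
            break
        used.add(item.qid)
        correct = answer(item)            # present the question to the participant
        responses.append((item, correct))
        theta = update(theta, responses)  # scoring procedure / ability update
    return theta, responses


# Toy components (all hypothetical) showing how the pieces fit together.
def closest(pool, theta, used):
    unused = [i for i in pool if i.qid not in used]
    return min(unused, key=lambda i: abs(i.b - theta), default=None)


def simulated_user(item):
    return item.b <= 0.5  # answers easy and medium items correctly


def step_update(theta, responses):
    return theta + (0.5 if responses[-1][1] else -0.5)


def stop_after_three(responses):
    return len(responses) >= 3


pool = [Item("q1", -1.0), Item("q2", -0.5), Item("q3", 0.0), Item("q4", 0.5), Item("q5", 1.0)]
theta, responses = run_cat(pool, 0.0, closest, simulated_user, step_update, stop_after_three)
print(theta, [item.qid for item, _ in responses])  # 0.5 ['q3', 'q4', 'q5']
```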

Fig. 1. The CAT workflow

The prototype uses a type of CAT known as stradaptive (stratified adaptive) testing [11-12]. Stradaptive testing uses a bank of test questions divided into difficulty levels: if the user answers a question correctly, the next question is taken from a higher level of the bank, and if the answer is incorrect, from a lower level. Fig. 2 shows how test questions are presented in stradaptive testing, with the question bank divided into three difficulty levels: low, medium, and high.

Fig. 2. Diagram of the algorithm for presenting test questions in stradaptive testing

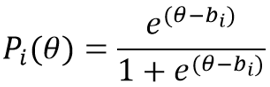
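The level transitions of stradaptive testing with three levels can be sketched as follows. The sketch assumes that each answer moves the participant by exactly one level and that the lowest and highest levels act as floor and ceiling; `next_level` and `level_path` are hypothetical names.

```python
LEVELS = ["low", "medium", "high"]


def next_level(current, correct):
    """Move one level up after a correct answer, one level down after a wrong one."""
    i = LEVELS.index(current)
    if correct:
        i = min(i + 1, len(LEVELS) - 1)  # "high" is the ceiling
    else:
        i = max(i - 1, 0)                # "low" is the floor
    return LEVELS[i]


def level_path(start, answers):
    """Sequence of levels visited for a given sequence of answers."""
    path = [start]
    for correct in answers:
        path.append(next_level(path[-1], correct))
    return path


print(level_path("medium", [True, True, False, False, False]))
# ['medium', 'high', 'high', 'medium', 'low', 'low']
```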

In the prototype, the one-parameter Rasch model, also called the one-parameter logistic latent trait model (1PL) [13], is used to select the next question, i.e., it serves as the item selection algorithm. The Rasch model has two main parameters: the latent parameter of the test participant’s knowledge level (θ) and the task difficulty parameter (b). The probability of a correct answer to a question is given by the formula in Fig. 3, which depends on the difference between the participant’s knowledge level and the difficulty of the question.

Fig. 3. Formula of the one-parameter Rasch model
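In its standard logistic form (assumed here to correspond to Fig. 3), the one-parameter Rasch model gives the probability that a participant with knowledge level θ answers question j of difficulty b_j correctly as:

$$
P(X_j = 1 \mid \theta) = \frac{e^{\theta - b_j}}{1 + e^{\theta - b_j}} = \frac{1}{1 + e^{-(\theta - b_j)}}
$$

When θ = b_j the probability is exactly 0.5, and each unit by which θ exceeds b_j increases the log-odds of a correct answer by one.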

Let us now look more closely at each component of the CAT process in the prototype of the adaptive testing system. The calibrated item pool is a bank of test questions stored on the server in the database (DB) of the mobile application “Вир Історії” (History Vortex) as a corresponding relation (table or collection). Since the CAT process is a variant of stratified adaptive testing, the item pool is calibrated by difficulty level, of which there are three: low, medium, and high. Accordingly, each test question in the item pool has its own difficulty level. Each question is also assigned a category (topic), since the test covers the history of Ukraine and the questions come from different historical periods and topics. The item pool therefore has two calibrated parameters, which are used in later stages of the CAT process: in the item selection algorithm, i.e., when selecting the next item during testing, and in the scoring procedure.

The starting point of the test can be determined in several ways:

- The user defines the initial level, i.e., chooses the difficulty and category of the first question at the beginning of the test.

- The initial level is set automatically to medium: the first question is of medium difficulty and its category is chosen at random. This is used when the user does not choose a difficulty level, since choosing one is optional.

- The initial level is determined from the user’s previous adaptive test results; like the other options, this feature is optional.
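The three ways of determining the starting point can be combined in one function. The order of precedence (the user's own choice, then the previous result, then the medium default) is an assumption, as is the name `starting_point`; the text only states that the choices are optional and that medium with a random category is the default.

```python
import random

LEVELS = ("low", "medium", "high")


def starting_point(categories, chosen_level=None, chosen_category=None,
                   previous_level=None, rng=None):
    """Return (level, category) for the first question.

    Assumed priority: the user's own choice, then the level reached in a
    previous adaptive test, then the default "medium" level.
    """
    if rng is None:
        rng = random.Random()
    if chosen_level in LEVELS:
        level = chosen_level
    elif previous_level in LEVELS:
        level = previous_level
    else:
        level = "medium"
    # The category is the user's choice if valid, otherwise picked at random.
    category = chosen_category if chosen_category in categories else rng.choice(categories)
    return level, category


print(starting_point(["Kyivan Rus", "Cossack era"], chosen_level="high", chosen_category="Cossack era"))
# ('high', 'Cossack era')
```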

In the prototype of the adaptive testing system, the item (task) selection algorithm is implemented with the one-parameter Rasch model. The model determines the probability of a correct answer to a question from the difference between the participant’s knowledge level and the difficulty of the question; the task difficulty is taken from the corresponding question parameter in the calibrated item pool. Then, based on the user’s abilities as reflected in their previous answer and on the parameters of the calibrated item pool, the question that is optimal in terms of difficulty is selected, subject to the topic of the task (question).
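A minimal sketch of topic-constrained selection with the Rasch model is shown below. It assumes that the three difficulty levels are mapped to numeric b values (the mapping `LEVEL_DIFFICULTY` is hypothetical) and that the "optimal" question is the one whose predicted probability of a correct answer is closest to 0.5; the prototype's exact optimality rule is not stated in the text.

```python
import math
from dataclasses import dataclass

LEVEL_DIFFICULTY = {"low": -1.0, "medium": 0.0, "high": 1.0}  # hypothetical b values


@dataclass(frozen=True)
class Question:
    qid: str
    level: str
    topic: str


def rasch_p(theta, b):
    """Probability of a correct answer under the Rasch (1PL) model."""
    return 1.0 / (1.0 + math.exp(-(theta - b)))


def select_question(pool, theta, topic, used, target=0.5):
    """Unused question on the given topic whose success probability is closest to target."""
    candidates = [q for q in pool if q.topic == topic and q.qid not in used]
    if not candidates:
        return None
    return min(candidates,
               key=lambda q: abs(rasch_p(theta, LEVEL_DIFFICULTY[q.level]) - target))


pool = [
    Question("q1", "low", "Cossack era"),
    Question("q2", "medium", "Cossack era"),
    Question("q3", "high", "Cossack era"),
    Question("q4", "medium", "Kyivan Rus"),
]
print(select_question(pool, 0.8, "Cossack era", used=set()).qid)   # q3
print(select_question(pool, -0.9, "Cossack era", used=set()).qid)  # q1
```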

The scoring procedure includes the following steps:

- Each task (question) has its own specific significance score, according to its level of difficulty.

- When the user answers a task, the system checks whether the answer is correct. One point is given for each correct answer and zero points for each incorrect answer, i.e., a dichotomous approach is used in which each task has only two possible scores.

- Tasks with higher significance scores are then assigned to users who show a higher level of knowledge, while tasks with lower significance scores are assigned to users who show a lower level of knowledge.

- A user’s total score is calculated by adding up the scores for all the questions they have answered. This score is used to compare the level of knowledge between users or to make decisions about the next steps in the testing process. The user’s total score is also used as a test result and displayed to the user.
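The scoring steps can be sketched as dichotomous item scores summed into a total. How the significance score enters the total is not specified in the text; the sketch assumes it acts as an optional per-level weight multiplied by the 0/1 item score, and the weight values shown are hypothetical. Without weights, the total is simply the number of correct answers.

```python
def item_score(correct):
    """Dichotomous scoring: 1 point for a correct answer, 0 for an incorrect one."""
    return 1 if correct else 0


def total_score(answers, weights=None):
    """Sum of item scores; answers is a list of (level, correct) pairs.

    weights, if given, maps a difficulty level to a significance score
    (an assumption about how significance could be applied).
    """
    total = 0
    for level, correct in answers:
        weight = 1 if weights is None else weights[level]
        total += weight * item_score(correct)
    return total


answers = [("medium", True), ("high", False), ("medium", True), ("low", True)]
print(total_score(answers))                                         # 3
print(total_score(answers, {"low": 1, "medium": 2, "high": 3}))     # 5
```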

The CAT process also uses a test termination criterion that combines several factors. One of them is reaching sufficient accuracy in measuring the user’s knowledge: testing stops when the user has answered three questions correctly at each difficulty level. Another is reaching the error limit: if the user answers three questions in a row incorrectly, the test ends. This avoids continuing the test when the user does not demonstrate the knowledge needed to complete the tasks. In addition, a maximum number of questions is set, after which the test stops automatically. This limits the duration of the test and ensures efficient use of resources.
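The combined termination criterion can be expressed as a single predicate over the answers given so far. The thresholds of three correct answers per level and three consecutive errors come from the text; the maximum of 15 questions is a hypothetical value, since the text does not state the limit, and "three correct answers at each level" is read here as "at least three".

```python
from collections import Counter

LEVELS = ("low", "medium", "high")


def should_stop(answers, max_questions=15, per_level=3, error_limit=3):
    """answers: list of (level, correct) pairs in the order they were given.

    max_questions=15 is a hypothetical default; per_level and error_limit follow the text.
    """
    # Maximum number of questions reached.
    if len(answers) >= max_questions:
        return True
    # Error limit: the last `error_limit` answers were all incorrect.
    recent = answers[-error_limit:]
    if len(recent) == error_limit and not any(correct for _, correct in recent):
        return True
    # Accuracy: at least `per_level` correct answers on every difficulty level.
    correct_per_level = Counter(level for level, correct in answers if correct)
    return all(correct_per_level[level] >= per_level for level in LEVELS)


print(should_stop([("medium", False)] * 3))  # True (three errors in a row)
```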

Having covered the CAT components in the prototype of the adaptive testing system, we now turn to the agent-based approach. In adaptive testing software, program agents can be separate program modules that perform specific tasks or stages of the CAT process. Each program agent implements a common program interface and has clearly defined responsibilities. Examples of such program agents:

- A program agent for interacting with the calibrated item pool: This agent is responsible for communicating with the test question bank, retrieving the necessary data from the pool, and transferring it for further use in the testing process.

- A program agent for determining the user’s initial level: This agent analyzes previous user responses or other data to establish a baseline of knowledge and skills for each user before testing begins.

- A program agent for selecting the next task in the testing process: This agent decides which task or question to send to the user based on their previous answers, dependencies, and algorithms used in the system.

- A program agent for the scoring procedure and the test termination criterion: This agent is responsible for processing the user’s answers, calculating the scores, and deciding when to end the test based on the specified criterion.

These agents are independent modules that work together in the adaptive testing system, performing their defined functions and communicating with each other to achieve the goals of the CAT process. Fig. 4 shows the sequence in which the software agents are executed in the CAT process.

Fig. 4. Sequence of execution of program agents for the CAT process

Thus, the use of program agents unifies the construction of the components of an adaptive testing system. In addition, this approach makes the software extensible: to add a new stage to the CAT process, it is enough to develop a new program agent that implements the common program interface.
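The agent-based structure can be sketched with an abstract base class as the common interface and a runner that executes agents in sequence over a shared state. All class names, the dictionary-based state, and the simplified selection rule are hypothetical; the `AuditAgent` only illustrates how a new stage can be added without changing the existing agents.

```python
from abc import ABC, abstractmethod


class CatAgent(ABC):
    """Common program interface (hypothetical): read and update a shared state."""

    @abstractmethod
    def run(self, state: dict) -> dict:
        ...


class ItemPoolAgent(CatAgent):
    """Loads questions from the item pool (an in-memory list instead of the DB)."""

    def __init__(self, questions):
        self.questions = questions

    def run(self, state):
        state["pool"] = list(self.questions)
        return state


class StartingPointAgent(CatAgent):
    """Uses the level chosen by the user, or "medium" by default."""

    def run(self, state):
        state["level"] = state.get("chosen_level") or "medium"
        return state


class SelectionAgent(CatAgent):
    """Picks the first unused question at the current level."""

    def run(self, state):
        used = state.setdefault("used", set())
        matches = [q for q in state["pool"]
                   if q["level"] == state["level"] and q["id"] not in used]
        state["current"] = matches[0] if matches else None
        if state["current"] is not None:
            used.add(state["current"]["id"])
        return state


class AuditAgent(CatAgent):
    """A new stage added without touching the others: logs the selected question."""

    def run(self, state):
        current = state.get("current")
        state.setdefault("log", []).append(current["id"] if current else None)
        return state


def run_pipeline(agents, state):
    """Executes the agents in order, passing the shared state along."""
    for agent in agents:
        state = agent.run(state)
    return state


questions = [{"id": "q1", "level": "low"}, {"id": "q2", "level": "medium"}]
base = [ItemPoolAgent(questions), StartingPointAgent(), SelectionAgent()]
print(run_pipeline(base, {})["current"]["id"])                               # q2
print(run_pipeline(base + [AuditAgent()], {"chosen_level": "low"})["log"])  # ['q1']
```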

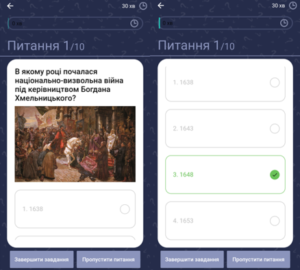

The existing prototype of the adaptive knowledge testing system was also tested in the mobile application “Вир Історії” (History Vortex): a test on the history of Ukraine was designed using the functionality of the prototype, namely the CAT process and the one-parameter Rasch model. Fig. 5 shows adaptive testing of knowledge of the history of Ukraine based on the prototype.

Fig. 5. An example of adaptive testing in the mobile application

Further implementations

In the future, an FNN is planned to be implemented to form a hybrid approach to implementing the software system. The hybrid approach will combine the one-parameter Rasch model and the FNN.

The one-parameter Rasch model will be used to determine question difficulty and to assess the participant’s knowledge level from their answers. The model takes into account the relationship between the participant’s knowledge level and the difficulty of the question, and it makes it possible to estimate the participant’s knowledge level from their answers to a set of questions.
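Estimating the knowledge level from a set of answers under the Rasch model is commonly done by maximum likelihood. The sketch below uses Newton-Raphson iterations; the starting value, iteration limit, and the bound of ±4 (needed because no finite estimate exists when all answers are correct or all are wrong) are assumptions, not parameters from the article. At the estimate, the expected number of correct answers equals the observed number.

```python
import math


def rasch_p(theta, b):
    """Probability of a correct answer under the Rasch (1PL) model."""
    return 1.0 / (1.0 + math.exp(-(theta - b)))


def estimate_theta(responses, difficulties, theta=0.0, iterations=50, bound=4.0):
    """Maximum-likelihood estimate of theta by Newton-Raphson.

    responses: 0/1 answers; difficulties: the matching b values.
    For all-correct or all-wrong patterns the MLE does not exist, so the
    estimate is kept within [-bound, bound].
    """
    for _ in range(iterations):
        ps = [rasch_p(theta, b) for b in difficulties]
        gradient = sum(x - p for x, p in zip(responses, ps))  # dlogL/dtheta
        information = sum(p * (1.0 - p) for p in ps)         # -d2logL/dtheta2
        if information < 1e-12:
            break
        step = gradient / information
        theta = max(-bound, min(bound, theta + step))
        if abs(step) < 1e-8:
            break
    return theta


theta_hat = estimate_theta([1, 1, 0], [-1.0, 0.0, 1.0])
print(round(theta_hat, 2))
```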

A feed-forward neural network [14] will be used to adapt the test to the needs of each participant. The neural network takes inputs, such as the participant’s answers and question characteristics, and generates an estimate of the participant’s knowledge level. This score is used to select the next question that best matches the participant’s current level of knowledge. As the test progresses, the neural network updates its weights and parameters, which helps to improve the adaptation of the test to a particular participant.
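The article does not fix the network architecture, so the following is only a minimal sketch of a feed-forward network of the kind described: one hidden ReLU layer maps answer features to a knowledge score, and `sgd_step` updates the weights by backpropagation on a squared-error loss. The feature encoding (pairs of correctness and difficulty for the last answers), the random initial weights, and the training target (for example, a Rasch estimate) are all hypothetical.

```python
import random


class TinyFNN:
    """One-hidden-layer feed-forward network: answer features -> knowledge score."""

    def __init__(self, n_inputs, n_hidden, seed=0):
        rng = random.Random(seed)
        self.w1 = [[rng.uniform(-0.5, 0.5) for _ in range(n_inputs)] for _ in range(n_hidden)]
        self.b1 = [0.0] * n_hidden
        self.w2 = [rng.uniform(-0.5, 0.5) for _ in range(n_hidden)]
        self.b2 = 0.0

    def _forward(self, x):
        z = [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(self.w1, self.b1)]
        h = [max(0.0, zj) for zj in z]                          # ReLU hidden layer
        y = sum(w * hj for w, hj in zip(self.w2, h)) + self.b2  # linear output
        return z, h, y

    def predict(self, x):
        return self._forward(x)[2]

    def sgd_step(self, x, target, lr=0.05):
        """One gradient-descent step on 0.5 * (y - target) ** 2; returns the loss before it."""
        z, h, y = self._forward(x)
        err = y - target
        # Hidden-layer gradients use the output weights before they are updated.
        dz = [err * w * (1.0 if zj > 0 else 0.0) for w, zj in zip(self.w2, z)]
        self.w2 = [w - lr * err * hj for w, hj in zip(self.w2, h)]
        self.b2 -= lr * err
        for j, dzj in enumerate(dz):
            self.w1[j] = [w - lr * dzj * xi for w, xi in zip(self.w1[j], x)]
            self.b1[j] -= lr * dzj
        return 0.5 * err ** 2


# Features: (correct, difficulty b) for the last three answers, flattened.
x = [1, -1.0, 1, 0.0, 0, 1.0]
net = TinyFNN(n_inputs=6, n_hidden=4)
for _ in range(100):
    net.sgd_step(x, target=0.8)
print(round(net.predict(x), 3))
```

The resulting score could then be compared with item difficulties, as in the Rasch-based selection, to choose the next question.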

The main advantage of the hybrid approach using the one-parameter Rasch model and the FNN is that it combines the strengths of both methods. The Rasch model provides an assessment of the level of knowledge based on the participant’s answers, while the neural network helps to select the best questions to adapt the test.

After the hybrid approach is implemented, the adaptive testing software system is planned to be integrated into the mobile application “Вир Історії” (History Vortex) through an application programming interface (API) [15]. The main purpose of this integration is to ensure reliable communication and data exchange between the adaptive testing system and the mobile application. Overall, API integration will allow the adaptive testing software system to interact easily with the mobile application and let users of the mobile application take adaptive tests whose functionality is implemented in the adaptive testing system.

In addition, on the basis of the mobile application “Вир Історії” (History Vortex), it is possible to develop an infrastructure of mobile applications for testing knowledge in other subjects, using the adaptive knowledge testing system for these mobile applications as well.

Conclusions

In this research, the role of adaptive testing in modern education was considered, and the subject area of CAT was analyzed. Based on this, it became possible to develop a prototype of an adaptive testing program system.

As a result, the solution proposed in this article is a prototype of a software system for adaptive testing on the history of Ukraine, based on implementing the CAT process with the Rasch model to select each new task according to the user’s abilities. This, in turn, makes testing more effective and individualized for each participant.

This system is based on the architecture of software agents, which facilitates easy extensibility and unification of the system. In turn, each software agent is responsible for a specific CAT component. Also, for further development, it is proposed to use a hybrid implementation approach for the adaptive testing system, which is a combination of a one-parameter Rasch model and an FNN neural network.

The prototype of the adaptive testing system was also tested in a mobile application, which demonstrated the relevance of this research and the prospects for further improvement of the adaptive testing system. In addition, the adaptive testing software system is planned to be integrated into the mobile application “Вир Історії” (History Vortex) using API-based system integration.

References

- Fedoruk, P. I. Adaptive tests: general provisions. Mathematical Machines and Systems. 2008. No. 1. P. 115–127.

- Fedoruk, P. I. The Use of Adaptive Tests in Intelligent Knowledge Control Systems. Artificial Intelligence. 2008.

- Meijer R. R., Nering M. L. Computerized Adaptive Testing: Overview and Introduction. Applied Psychological Measurement. 1999. Vol. 23, no. 3. P. 187–194.

- Wim J. van der Linden, Cees A.W. Glas. Computerized Adaptive Testing: Theory and Practice. – Dordrecht, The Netherlands: Kluwer, 2000. – 323 p.

- Wim J. van der Linden. Item Response Theory. Encyclopedia of Social Measurement. 2005. P. 379–387.

- Benton T. Item response theory, computer adaptive testing and the risk of self-deception / Tom Benton // Research Matters: A Cambridge University Press & Assessment publication. – 2021. – 32 – P. 82-100.

- An Introduction to Item Response Theory for Patient-Reported Outcome Measurement / T. H. Nguyen et al. The Patient – Patient-Centered Outcomes Research. 2014. Vol. 7, no. 1. P. 23–35.

- Ogunsakin I. B. Item Response Theory (IRT): A Modern Statistical Theory for Solving Measurement Problem in 21st Century / Isaac Bamikole Ogunsakin, Yusuf Shogbesan // International Journal of Scientific Research in Education. – 2018. – Vol. 11(3B). – P. 627–635.

- Oppl S. A flexible online platform for computerized adaptive testing / Stefan Oppl, Florian Reisinger, Alexander Eckmaier, Christoph Helm // International Journal of Educational Technology in Higher Education. – 2017. – Vol. 14, No. 1.

- Komarc M. Computerized Adaptive Testing in Kinanthropology: Monte Carlo Simulations Using the Physical Self Description Questionnaire: Extended Summary of Doctoral Thesis / Martin Komarc // Charles University. –

- Voitovych, I. S. “Use of adaptive testing in the educational process of a higher educational institution” / Ihor Stanislavovych Voitovych, Anastasiia Anatoliivna Ivashchenko // Scientific publications of the FMF of the Central State Pedagogical University, Scientific Notes. – Issue 6. – Series: Problems of Methods of Physical, Mathematical and Technological Education. – 2014. – Vol. 2, No. 6.

- Lendyuk, T. V. “Modelling of Computer Adaptive Learning and Testing” // Proceedings of the Odessa Polytechnic University. – 2013. – № 1. – P. 110-115.

- Wim J. van der Linden. Handbook of Item Response Theory: Three Volume Set / Wim J. van der Linden. – Chapman and Hall/CRC, 2018. – 1500 p.

- Maynard M. Neural Networks: Introduction to Artificial Neurons, Backpropagation and Multilayer Feedforward Neural Networks with Real-World Applications / Morgan Maynard. – Independently published, 2020. – 53 p.

- Dudhagara D. C. R., Joshi M. A. API Integration in Web Application. International Journal of Trend in Scientific Research and Development. 2017. Volume-1, Issue-2.