Daniela Pucicov

Abstract

As humankind moves ever faster and deeper into the digital era, with devices accompanying both our professional and personal lives 24/7, the probability of becoming a victim of a cyberattack grows every day. This is why cybersecurity is particularly important. It encompasses everything pertaining to the protection of sensitive data, personally identifiable information, protected health information, personal information, intellectual property, and governmental and industry information systems. However, cybersecurity systems have their flaws, especially when it comes to the human factor. This article presents the risks that cognitive biases pose to cybersecurity and ways to overcome the potentially catastrophic errors they may cause.

Keywords: cybersecurity, cognitive bias, errors, human factor

Introduction

The human brain is exposed to vast amounts of information every day. Processing all of this information, making decisions, and forming judgements are highly energy-demanding activities. In an attempt to simplify information processing, the brain tends to create patterns and shortcuts, which can often result in cognitive biases: systematic errors or deviations from rationality in judgment.[1] According to Cherry[2], cognitive biases can be related to memory (how and what we remember) or to limited attention (because “full-attention mode” is scarce, our brains become selective about what they pay attention to). Anyone can be affected by cognitive biases, since they are “inherent characteristics of human nature”[4].

This article aims to draw attention to the human factor in the occurrence of cybersecurity errors, specifically to the effect of cognitive biases on security decision-making.

Cognitive biases and cyber security threats

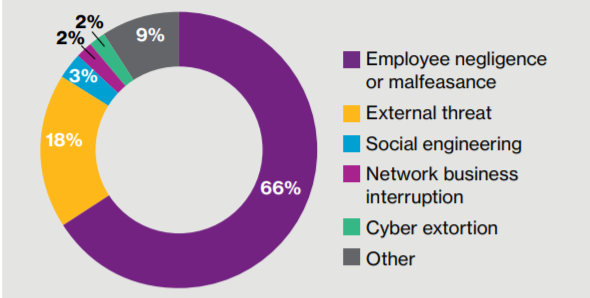

As studies have shown, many cybersecurity issues arise from cognitive biases. According to the Willis Towers Watson Cyber Risk Culture Survey[4], 66% of cyber breaches are attributed to human error (see Figure 1). Attackers exploit cognitive biases in combination with a system’s technical flaws to carry out successful attacks. For a better explanation of the problem, we shall look at some specific cognitive biases and how they impact cybersecurity:

Figure 1. Percentage of claims by breach. Source: Willis Towers Watson Cyber Risk Culture Survey

- The ostrich effect is the tendency to avoid potentially unpleasant information or situations perceived as negative. In an organization’s cybersecurity environment, this could mean ignoring statistics or news about cyberattacks on organizations, or disregarding security recommendations and best practices because the company is unwilling to spend extra money or employees are reluctant to make the extra effort[3].

- Optimism bias is the “illusion of invulnerability”[6]: the tendency to believe that we are less likely than others to find ourselves in the negative circumstances others are experiencing. In information security, it manifests as individuals perceiving their own risk of attacks such as phishing to be lower than that of others. This also extends to organizational contexts, where individuals believe their own organization to be at lower risk from information security threats than competing organizations. If individuals are optimistically biased regarding information security threats, they may neglect precautionary measures, which in turn increases their vulnerability.

- Confirmation bias is the tendency to search for, interpret, favor, and recall information in a way that confirms or strengthens one’s prior personal beliefs or hypotheses[5]. It can lead the mind to look only for specific issues in an IT infrastructure, based on one’s previous experience and understanding, instead of considering security as a whole. When security professionals explore a theory about a particular problem, they are highly susceptible to confirming their beliefs by searching only for evidence that supports their hunch. For example, an experienced security analyst may “decide” what happened before investigating a data breach, assuming it was a malicious employee because of previous events. Expertise and experience, while valuable, can become a weakness if people regularly investigate incidents in a way that only supports their existing beliefs.

- The availability heuristic comes into play when decision-makers rely too heavily on recent trends or on the information that comes to mind first. In the case of an attack, focusing only on one specific issue rather than on security as a whole is a sign of the availability heuristic. Although taking the necessary actions to prevent currently trending attacks is critical, it is equally important to keep addressing other security considerations.

- Fatalistic thinking refers to an outlook in which individuals believe they have no personal power to influence risks, because those risks are controlled by external forces. This cognitive bias stems from misinterpretations of risk and can lead to unwise behavior. In information security, this might mean believing there are no measures one can take to prevent a phishing attack, because it is “something that will happen anyway”. Likewise, some people tend to believe that everything is ‘hackable’ and that there is therefore no point in protection efforts. This feeling may intensify with remote work, as employees are distanced from their usual organizational support.

Conclusion and recommendations

As highlighted at the beginning of this article, the incidence of human-factor errors (often caused by cognitive biases) is significantly high. The biases we discussed are only a small sample of how the cybersecurity industry is shaped by human decision-making[7]. To address the impact of cognitive bias, it is necessary to focus on understanding people and how they make decisions at the individual and organizational levels in the cybersecurity industry.

The crucial requirement for a company seeking to minimize the incidence of cognitive biases is to acknowledge their presence and identify where specifically they occur. Besides continually training employees in information security and running simulation exercises on a regular basis, it is important to raise awareness of common cognitive biases across agencies and within security teams, so that situations where critical decisions are susceptible to the negative impact of mental shortcuts can be better identified.

A better understanding of biases makes it easier to identify and mitigate the impact of flawed reasoning and decision-making conventions. More tangibly, understanding user behavior at the individual level can also help minimize the degree to which cognitive biases drive an agency’s security posture.

References

- [1] HASELTON, M.G. et al. The evolution of cognitive bias. In The handbook of evolutionary psychology. 2015. pp. 1–20.

- [2] CHERRY, K. – MORIN, R.A. How Cognitive Biases Influence How You Think and Act. 2019. p. 10.

- [3] MATUŠOVIČ, M. et al. Protection of Competition – the Global Economic Crisis VI. Mukařov – Srbín: Ľuboš Janica, 2018. 190 p. ISBN 978-80-270-1037-0.

- [4] MONEV, V. Cognitive biases in information security causes, examples and mitigation. In Cyber Security Review. 2018. p. 7.

- [5] NICKERSON, R.S. Confirmation bias: A ubiquitous phenomenon in many guises. In Review of General Psychology. 1998. Vol. 2, no. 2, pp. 175–220.

- [6] SAM, J. How Cognitive Biases can impact the cybersecurity of your organization. 2020.

- [7] VESELÝ, P. Application of the rules of BYOD in the enterprise. In Management in theory and practice [electronic source]. Praha: Newton College, 2016. pp. 407–410. ISBN 978-80-87325-08-7.

Full issue of the journal Manažérska Informatika, volume 1, 2020, issue 2

Indexed: Google Scholar